The Quick Read

Agnostic AI means you can swap models, clouds, and tools without costly rewrites. It cuts lock-in risk, keeps costs predictable, and makes compliance easier. This guide shows you how to design the architecture, prove value with a PoC, scale with data engineering and predictive analytics, and keep security and governance tight—all in plain, practical steps.

What is Agnostic AI?

Agnostic AI, or more broadly, agnostic in an IT context, refers to the ability of something to function without requiring or relying on the specific underlying details of the system it is operating within.

In the context of AI, this generally means the design and deployment of AI systems that are not confined to specific platforms, hardware, software frameworks, or even specific large language models (LLMs).

Essentially, an agnostic AI system is designed to be flexible and adaptable, able to operate across diverse environments and integrate with various technologies without being tied to a single vendor or proprietary solution.

Key Aspects and Benefits of Agnostic AI

- Flexibility: Agnostic AI allows organizations to adapt to and incorporate new technologies as they emerge, ensuring the longevity and relevance of their AI systems.

- Interoperability: It facilitates seamless communication and collaboration between different AI solutions and existing systems within an organization’s ecosystem.

- Innovation: By not being limited to specific technologies, developers can experiment with and leverage the most advanced tools available, fostering continuous innovation.

- Cost-effectiveness: According to AI Business, an agnostic approach allows businesses to choose and utilize LLMs strategically, potentially leveraging open-source models, which can be free or more affordable than commercial models. It also reduces the need for expensive and time-consuming migrations when technology evolves.

- Reduced Vendor Lock-in: Organizations avoid becoming overly dependent on a particular vendor’s technology, reducing risks associated with potential price changes, service disruptions, or strategic shifts by a single provider.

- Scalability: Agnostic AI infrastructure allows for the exchange and integration of new LLMs as they become available, ensuring the system can grow and evolve alongside the business needs.

1) Executive Snapshot: Build AI Independence, Not AI Dependence

Your board wants AI outcomes without runaway bills or lock-in regrets. You want choice: use the best model today and switch tomorrow with minimal rework. That is the promise of agnostic AI.

- Why now: Model quality changes fast. Pricing shifts. Regulations tighten. You need the power to pivot.

- What changes: You standardize the way your apps talk to models, data stores, and infra. You avoid hard wiring your stack to one vendor.

- What you gain: Cost control, faster upgrades, and lower security and compliance risk.

- What you lose: Expensive rewrites. Fragile integrations. Vendor dependency.

This guide shows you how to design an agnostic AI stack that your teams can ship, monitor, and scale—without buzzwords and with real engineering discipline.

2) The Hidden Costs of Vendor Lock-In (And How to Avoid Them)

Lock-in looks harmless at the start. You ship fast with one provider. Then features creep in. Soon your pipelines, prompts, embeddings, and monitoring all rely on one vendor’s way of doing things. Switching becomes painful.

Where lock-in bites:

- Switching costs: You must refactor prompts, APIs, data flows, and observability. Sprints go to rework, not new features.

- Cost volatility: Per-token or per-hour bills spike with usage. You have little leverage.

- Compliance pressure: Data residency, industry rules, and audits may force you to move workloads. Lock-in turns a policy change into a rebuild.

- Resilience: A single provider outage or policy change stalls your roadmap.

How agnostic AI reduces the pain:

- Use adapters and interfaces that sit between your apps and each model or tool.

- Keep prompts and system instructions in a versioned store, not scattered in code.

- Separate data pipelines from model calls.

- Keep observability and governance independent from any one vendor.

Result: you keep leverage. If a better model or price arrives, you switch with minimal code change.

3) What “Enterprise-Grade Agnostic AI” Really Means

Plain definition: Agnostic AI is a design approach where your applications can use many models, clouds, and data tools through a stable layer of contracts and APIs. You can change one piece without breaking the system.

Three pillars:

- Infrastructure Agnosticism

Run on any mix of public cloud, on-prem, or edge. Route jobs to the most sensible place: near data for privacy, near users for latency, or in cloud for burst capacity. - Model Agnosticism

Call different LLMs, vision models, and speech models through one unified interface. You standardize inputs and outputs (text, function calls, JSON) and keep model-specific quirks inside adapters. - Data Agnosticism

Work with many data sources (databases, lakes, CRMs, ERPs, logs, documents) through a consistent ingestion and governance layer. You avoid tying data quality and lineage to one AI vendor.

What this looks like in code:

- Your app talks to a Model Router (not a specific LLM SDK).

- The router picks the best model for the task based on policy (cost, latency, accuracy, region).

- Prompts and tools are managed centrally and injected at runtime.

- Telemetry, red-flags, and costs flow to the same dashboard regardless of model.

4) Architecture Blueprint: The Backbone of Agnostic AI

A simple, solid reference design:

A) Experience Layer (Where value shows up)

- Apps, bots, and internal tools

- Channels: web, mobile, chat, support systems

- Clear SLAs: latency, cost per request, PII handling

B) Intelligence Layer (Model-agnostic brain)

- Model Router & Adapters: Standardize calls to LLMs and other models

- Tooling & Functions: Retrieval, search, calculators, enterprise APIs

- Policies: Choose model by cost/latency/region/compliance; define safe-response rules

C) Data Layer (Fuel with control)

- Ingestion & Quality: Connectors for SaaS, DBs, files; dedupe and validation

- Vector & Indexes: Encodings and chunking strategies defined once, reused across models

- Catalog & Lineage: Know what data powers what answer

- Access Control: Row/column-level permissions; masking for PII/PHI

D) Orchestration & MLOps (Keep it reliable)

- Pipelines: Batch and streaming for ETL/ELT, RAG refresh, finetunes

- Experimentation: A/B test prompts, models, and retrieval strategies

- CI/CD: Version prompts, adapters, and policies; roll back fast

- Observability: Traces, latencies, token usage, failure trees, cost per feature

E) Security & Governance (More in Section 5)

- Identity & Access: SSO, scoped tokens, least privilege

- Secrets: KMS-backed, rotated often

- Audit Trails: Every request has a reason, source, result, and reviewer if needed

- Risk Controls: Abuse detection, prompt-injection guards, content rules

F) Run-Anywhere Infra

- Containers and autoscaling on cloud or on-prem

- Queueing for retries and dead-letter handling

- Caching to cut latency and cost

Design rules that keep it agnostic:

- No hard vendor types in your app code. Depend on your interfaces, not their SDKs.

- One prompt spec across models (with small adapter tweaks).

- One retrieval spec across embeddings and vector stores.

- One cost and telemetry schema across providers.

5) Compliance & Security: The Non-Negotiables

CISOs and regulators will ask three things: Where is the data? Who touched it? What controls stopped misuse? Your design should answer in seconds.

Data sovereignty

- Keep sensitive data in the right region (or on-prem).

- Route requests based on residency rules.

- Log when data leaves a region and why.

Identity, access, and least privilege

- Use SSO with strong MFA.

- Bind roles to tasks: view, label, deploy, audit.

- Never share keys in code. Rotate secrets on schedule and on incident.

Encryption and traffic rules

- Encrypt at rest and in transit.

- Use private endpoints or VPC peering where possible.

- Enforce egress policies. Block destinations you do not trust.

Auditability and explainability

- Keep human-readable logs: who asked what, which model replied, what tools ran.

- Store the prompt, context chunks, and final answer for review (subject to policy).

- Provide reason codes for decisions when the process supports it.

Abuse and prompt safety

- Sanitize inputs. Strip hidden instructions from documents.

- Set guardrails for harmful content.

- Rate-limit high-risk actions. Escalate to human when needed.

Incident playbooks

- Define steps for key exposure, model drift, and data leak.

- Revoke tokens fast. Rotate keys. Quarantine affected systems.

- Notify stakeholders and regulators as policy requires.

Compliance mapping (examples)

- GDPR/CPRA: Data minimization, residence, consent tracking

- HIPAA/PCI: Segmented environments, strict access, audit depth

- SOC 2/ISO 27001: Controls, monitoring, and change management

Build these into the platform, not just documents. If you can route by policy, you can pass audits with less drama.

6) Business Benefits: What Leaders Actually Get

1) Predictable cost

You send routine work to efficient models and burst work to cloud only when needed. You cap spend and track cost per feature.

2) Faster innovation

You try new models without a rewrite. If a newcomer beats your current choice on accuracy or price, you switch in days, not quarters.

3) Lower risk

If a provider changes pricing or terms, you have options. If new rules require regional processing, you route there with policy, not a rewrite.

4) Better governance

Security, observability, and data lineage live in your platform, not inside a vendor’s black box.

5) Happier teams

Engineers focus on features and quality instead of migration churn. Product teams test ideas faster. Legal and risk teams get cleaner answers.

7) Sector Blueprints: What Agnostic AI Looks Like in Practice

Finance

- Use an adapter to call different LLMs for KYC reviews and alert triage.

- Keep PII in-region. Use policy routing to ensure that.

- Log every decision with the documents used and the model that answered.

Outcome: Faster reviews, lower false positives, clean audits.

Healthcare

- Process notes with on-prem models when they include PHI.

- Use cloud models for non-sensitive experiments or de-identified data.

- Keep a full audit trail for every retrieval from EHR or PACS.

Outcome: Safer automation, better clinician tools, fewer privacy risks.

Retail & eCommerce

- Power search and recommendations with a model router.

- Shift to cheaper models for long-tail queries; use higher-accuracy models for high-value actions (checkout, returns).

- Refresh product embeddings on a schedule with data quality checks.

Outcome: Better conversions with clear cost control.

Manufacturing & Logistics

- Run small models at the edge for real-time checks.

- Send batch optimization to central compute at night.

- Blend sensor data, work orders, and supplier notes through one data pipeline.

Outcome: Less downtime, smarter planning, and satisfied ops teams.

8) Common Challenges (And Straightforward Fixes)

“Our stack is already tied to one vendor.”

Start by wrapping their SDK in your own interface. Migrate calls gradually. Add one more provider and prove the switch works.

“We don’t have time to build adapters.”

Begin with the top two or three use cases. Write the adapters only for them. Reuse the pattern later.

“Prompt chaos is slowing us down.”

Store prompts, versions, and metrics in one place. Add A/B testing. Retire prompts that lose.

“Security says no.”

Bring security into the platform design. Show routing by policy, end-to-end encryption, full audit logs, and incident playbooks.

“Costs are unclear.”

Track cost per feature, not just per token. Alert when a feature crosses its budget.

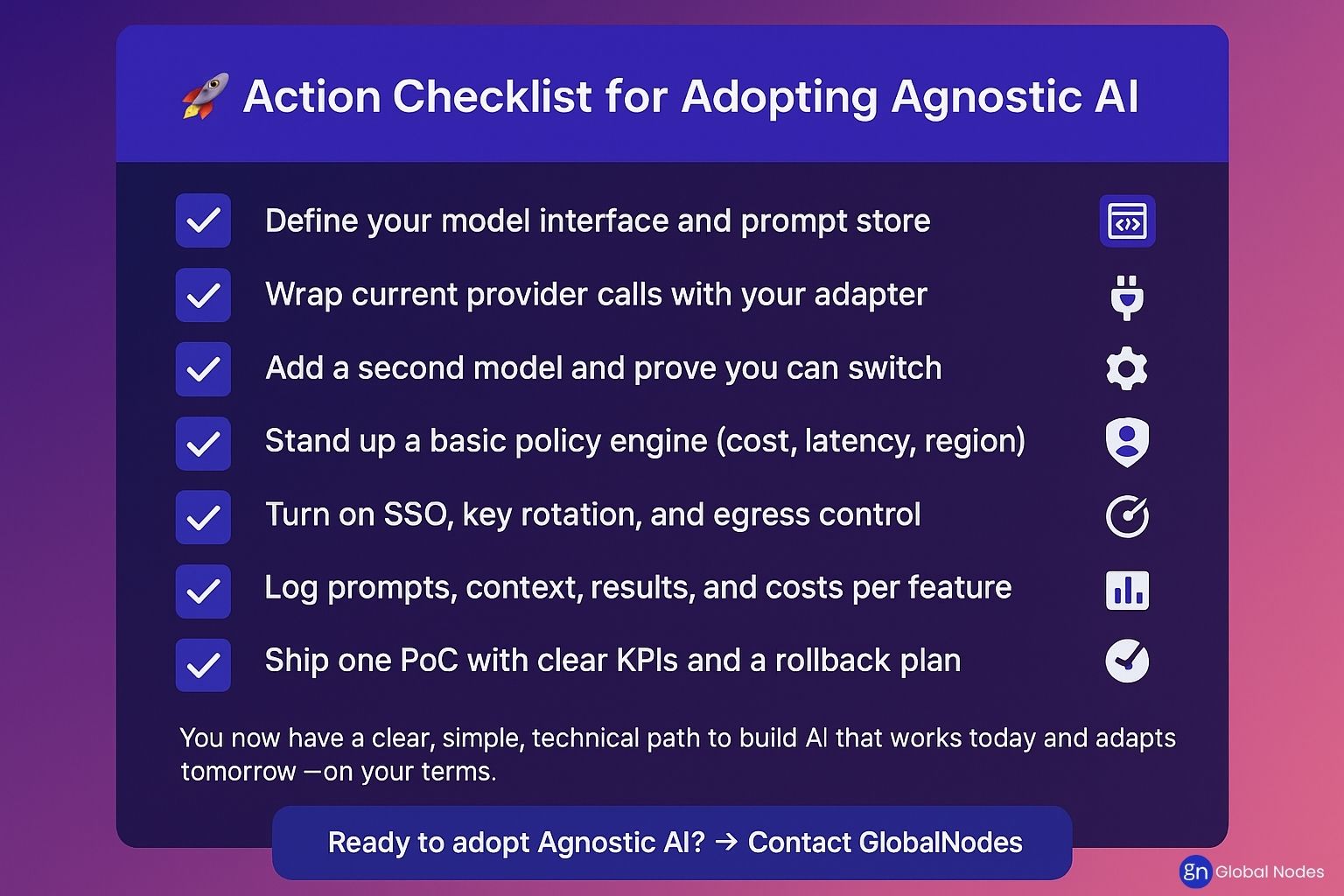

9) The Roadmap: From Audit to PoC to Scale

Step 1: Audit and plan

- Map current AI usage, data flows, and risks.

- Identify quick wins and high-risk lock-in points.

- Write simple policies: which data can leave which region, who can call which model, and what gets logged.

Step 2: Design the core interfaces

- Define your model API, retrieval API, and cost/telemetry schema.

- Choose your prompt store and your vector store.

- Decide on your policy language: cost caps, latency goals, regions, fallback order.

Step 3: Build a focused PoC

- Pick one business case with clear KPIs.

- Implement the router, two model adapters, one retrieval pipeline, and a simple dashboard.

- Prove that you can switch models without changing app code.

Step 4: Harden security and compliance

- Add SSO, least privilege, and key rotation.

- Lock down egress and set up regional routing.

- Turn on detailed audit logs and retention policies.

Step 5: Expand to production

- Add more use cases, models, and data sources.

- Automate CI/CD for prompts, adapters, and policies.

- Add continuous evaluation: quality, bias checks, and red-teaming.

Step 6: Optimize and govern

- Watch cost per feature.

- Retire poor prompts and unused adapters.

- Review incidents and update playbooks.

10) What’s Next: Stay Ready for Fast Model Cycles

The model you love today may not be the best the next quarter. Smaller models improve. Prices shift. New safety standards arrive. An agnostic platform helps you ride this wave.

Trends to watch:

- Smaller, efficient models that match or beat big ones on narrow tasks

- Function-calling and tool use as a standard pattern across providers

- Local and edge inference for privacy and low latency

- Composable AI where you chain multiple models and tools for a task

- Sustainability pressure that rewards efficient routing and right-sizing

If your platform is modular and policy-driven, you can adopt the best of each trend without a large rewrite.

11) Conclusion: Build Strategic Sovereignty Into Your AI

Agnostic AI is not a buzzword. It is an architectural choice that protects your roadmap. You design a stable interface, keep your data and security rules in your control, and choose the best tool for each job. You can switch when the market shifts. You can meet new rules without panic. You can grow without surprise costs.

If you want a single, clean place to start, align your leaders on three goals:

- No silent lock-in in app code.

- Routing by policy, not by habit.

- Security and compliance wired into the platform, not glued on later.

FAQs (Direct, Short Answers for AI Overviews)

Q1. What is agnostic AI in one sentence?

Agnostic AI is a design approach that lets your apps switch models, clouds, and tools through a stable interface, so you avoid lock-in.

Q2. Why should a CTO care?

It reduces rework, lowers risk, and gives you leverage on cost and compliance.

Q3. Do I need to change my whole stack?

No. Start by wrapping your current model calls with an internal interface and add one more provider.

Q4. Is this slower or more expensive?

Not if you design it well. Routing, caching, and the right model for each task often cut both latency and cost.

Q5. How do we handle compliance?

Route by policy, keep strong identity and logs, and encrypt everywhere. Prove who did what, where, and why.

Q6. Where do we store prompts and context?

Use a central store with versioning and metrics. Treat prompts like code: review and roll back.

Q7. How do we measure success?

Track business KPIs (defects closed, tickets resolved, conversions) plus cost per feature, latency, and quality scores.